Today there's news you should care about if you work with text and semantic search.

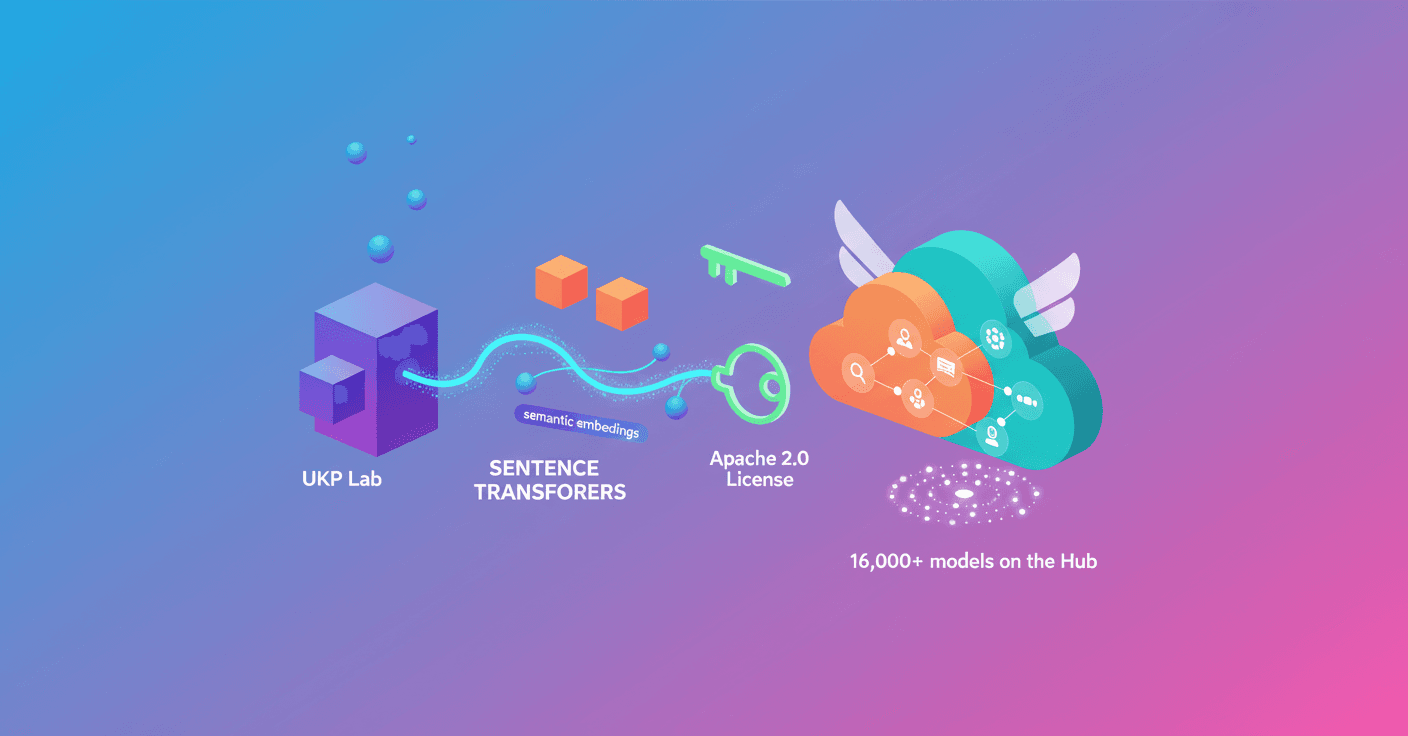

Sentence Transformers, the library that turned sentence embeddings into a practical tool for research and products, is moving under the Hugging Face umbrella, with all the stability and ecosystem integration that brings.

What was announced

In an announcement published on October 22, 2025, Hugging Face confirmed that Sentence Transformers is transitioning from the Ubiquitous Knowledge Processing Lab at TU Darmstadt to Hugging Face. Tom Aarsen, who has been stewarding the library since late 2023, continues as the project's maintainer. (huggingface.co)

Why it matters for you

What does Sentence Transformers do, in plain terms? It produces embeddings for sentences and paragraphs that capture semantic meaning, not just word overlap. That makes tasks like semantic search, similarity detection, clustering and paraphrase mining much more accurate and efficient.

Why should you care? Imagine a support search that understands intent and returns relevant answers even when users describe things differently. Or a recommendation system that groups reviews by meaning. That's what these embeddings enable. (huggingface.co)
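To make the idea concrete, here is a minimal sketch of how a semantic search ranks documents once embeddings exist: it compares vectors by cosine similarity and sorts by score. The three-dimensional vectors and document names below are hand-made for illustration and are not output from any real model; actual embeddings have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors (assumed values, NOT real embeddings), one per support article.
docs = {
    "reset-password": [0.9, 0.1, 0.0],
    "billing-refund": [0.1, 0.9, 0.1],
    "shipping-delay": [0.0, 0.2, 0.9],
}

# Stands in for the embedding of a query such as "I can't log in to my account".
query = [0.8, 0.2, 0.1]

ranking = rank_by_similarity(query, docs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

With these toy values the password article ranks first even though the imagined query never mentions the word "password", which is the behavior real embeddings aim to deliver.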

History and key facts

- Originally released in 2019 by Nils Reimers at TU Darmstadt.

- In 2020, multilingual support was added for over 400 languages.

- In 2021, the library added Cross Encoder support and major scoring improvements.

- In late 2023, Tom Aarsen from Hugging Face took over maintainership and introduced notable training and architecture improvements.

- Today the community has uploaded over 16,000 Sentence Transformers–based models to the Hub, and these models serve more than a million unique monthly users. (huggingface.co)

These milestones aren't just numbers: they show how the library moved from an academic contribution to an indispensable tool for NLP products and experiments.

What changes in practice

For developers and teams, the move means better infrastructure: continuous integration, testing and more robust deployment within the Hugging Face ecosystem. The license stays Apache 2.0 and the project will remain community-driven, so contributions are still welcome. (huggingface.co)

Day to day, you'll notice less friction finding models, more integrated documentation on the Hub, and compatibility with Hugging Face tools for training, fine-tuning and deployment.

How to get started today

- Check the official Sentence Transformers documentation for concepts and tutorials. (huggingface.co)

- Explore the sentence-transformers GitHub repo if you want to inspect code or contribute.

- Search for ready-to-use models on the Hugging Face Hub by filtering with `library=sentence-transformers`.

If you want a quick Python test, install with `pip install -U sentence-transformers`, then load a model and get embeddings in a few lines. That simplicity is part of why the library became popular.
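As a rough sketch of what those few lines can look like, the helper below loads a model, encodes some sentences, and computes their pairwise similarities. The model name `all-MiniLM-L6-v2` and the helper `embed_and_compare` are assumptions chosen for illustration (the announcement does not name a model), and `model.similarity` assumes a recent release of the library.

```python
def embed_and_compare(sentences, model_name="all-MiniLM-L6-v2"):
    """Encode sentences and return (embeddings, pairwise similarity matrix).

    `model_name` is an illustrative default, not something the announcement
    prescribes; any Sentence Transformers model from the Hub can be passed.
    """
    # Imported inside the function so that defining the helper does not
    # require the library (or a model download) until it is actually called.
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_name)  # fetches the model on first use
    embeddings = model.encode(sentences)     # one vector per input sentence
    similarities = model.similarity(embeddings, embeddings)
    return embeddings, similarities


# Example call (needs network access the first time, to fetch the model):
#
#   embeddings, scores = embed_and_compare([
#       "How do I reset my password?",
#       "I can't log in to my account.",
#       "When will my order arrive?",
#   ])
#   print(embeddings.shape)  # (number of sentences, embedding dimension)
#   print(scores)            # pairwise similarity matrix
```

The import is kept lazy only so the snippet can be pasted into a script without side effects; in a real project a top-level import would be the more usual choice.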

Final thoughts

Is this just an administrative change? Not exactly. It's a sign of maturity: the tech that started in research now has institutional support to scale and play nicely with the rest of the open AI ecosystem.

If you work with text, it's worth checking the models and thinking about how embeddings can improve your products or experiments. Looking for a better internal search, a more reliable way to group opinions, or semantic matching for your app? This move makes those options more accessible and sustainable.

For deeper reading, see the official Hugging Face post and the linked documentation above.