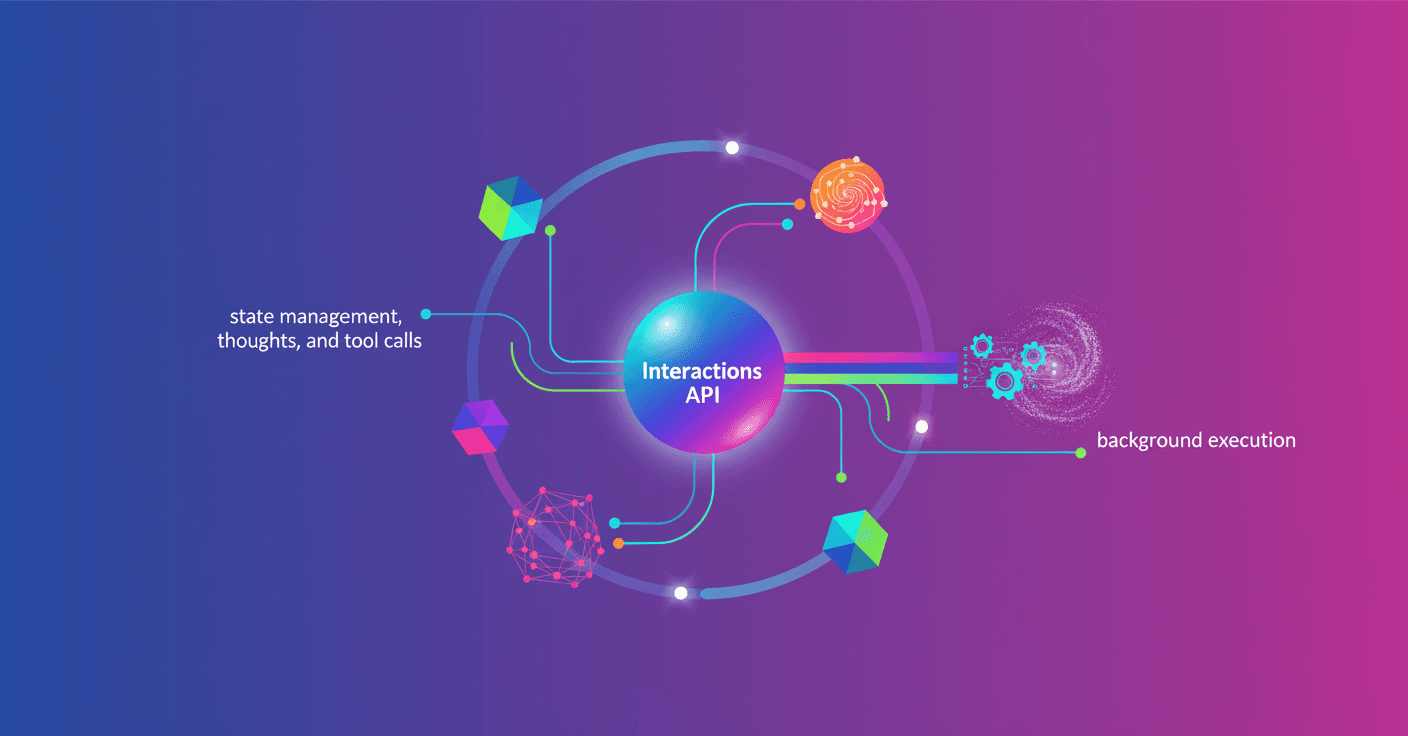

Today Google introduces the Interactions API, a unified interface for working with models such as Gemini 3 Pro and managed agents such as Gemini Deep Research.

What's the main idea? To give you a native, robust way to manage complex conversation histories, the model's internal thoughts, tool calls and state, all from a single endpoint.

What is the Interactions API

It's a single RESTful endpoint (/interactions) that lets you interact with both models and agents.

You pick the target with a simple parameter: set "model" when you want to call a model, or "agent" when you want to invoke a specialized agent.

On the agent side, the public beta currently supports deep-research-pro-preview-12-2025.
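To make the model-versus-agent choice concrete, here is a minimal Python sketch that builds the JSON body for a call to /interactions. It assumes the body uses top-level "model", "agent" and "input" fields and that a model ID such as gemini-3-pro-preview exists; only the agent ID comes from the announcement, so verify the rest against the OpenAPI spec. The helper name build_interaction_body is hypothetical.

```python
import json
from typing import Optional

# From the article: the agent ID available in the public beta.
DEEP_RESEARCH_AGENT = "deep-research-pro-preview-12-2025"
# Assumption: model ID used for illustration only.
EXAMPLE_MODEL = "gemini-3-pro-preview"


def build_interaction_body(input_text: str, *, model: Optional[str] = None,
                           agent: Optional[str] = None) -> dict:
    """Return a request body that targets exactly one of a model or an agent.

    Assumption: the endpoint expects top-level "model"/"agent" and "input" fields.
    """
    if (model is None) == (agent is None):
        raise ValueError("set exactly one of 'model' or 'agent'")
    body = {"input": input_text}
    if model is not None:
        body["model"] = model
    else:
        body["agent"] = agent
    return body


if __name__ == "__main__":
    print(json.dumps(build_interaction_body("Explain MCP briefly.", model=EXAMPLE_MODEL)))
    print(json.dumps(build_interaction_body("Survey recent work on X.", agent=DEEP_RESEARCH_AGENT)))
```

Rejecting bodies that set both fields, or neither, catches misrouted calls before any network round trip.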

The API extends what generateContent offers by adding key capabilities for modern agentic applications, such as state management, interpretable data models and background execution.

Key technical capabilities

- Optional server-side state: you can offload history management to the server. This simplifies client code, reduces context-management bugs and can lower costs by increasing the likelihood of cache hits.

- Interpretable and composable data model: the API exposes a clean schema for complex conversation histories. Messages, thoughts, tool calls and their results are represented so you can debug, manipulate, stream and reason about them programmatically.

- Background execution: you can delegate long-running inference loops to the server without keeping a client connection open. This is especially useful for tasks that take minutes or hours, such as in-depth searches or synthesizing large volumes of information.

- Remote MCP tool support: models can call servers that implement the Model Context Protocol (MCP) as tools, opening paths to integrate external data and specialized pipelines.
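The optional server-side state can be sketched as follows: the client keeps only the ID of the last interaction and sends it back with the next turn, instead of resending the whole history. This assumes a request field named previous_interaction_id and an "id" field on each response; ConversationHandle is a hypothetical helper, and nothing here touches the network.

```python
from typing import Optional


class ConversationHandle:
    """Hypothetical helper: keeps only the last interaction ID on the client.

    Assumption: the server stores the history and continues it when a request
    includes "previous_interaction_id"; each response carries an "id".
    """

    def __init__(self, model: str) -> None:
        self.model = model
        self.last_id: Optional[str] = None

    def next_body(self, user_text: str) -> dict:
        # Only the new turn is sent; earlier turns live on the server.
        body = {"model": self.model, "input": user_text}
        if self.last_id is not None:
            body["previous_interaction_id"] = self.last_id
        return body

    def record(self, response: dict) -> None:
        # Remember which server-side interaction the next turn should extend.
        self.last_id = response["id"]


if __name__ == "__main__":
    conv = ConversationHandle("gemini-3-pro-preview")  # assumed model ID
    print(conv.next_body("Hi, my name is Ana."))
    conv.record({"id": "example-id-1"})  # stand-in for a real API response
    print(conv.next_body("What is my name?"))
```

Because the client stores a single string per conversation, a mobile app or microservice can persist it cheaply and resume the thread later.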

Why a new API instead of extending generateContent

When generateContent was designed, its main use case was stateless request-response generation. That works well for chat and completions.

But models have evolved: they now think, use complex tools and require interleaved state handling. Trying to cram all those features into generateContent would have produced a fragile, hard-to-maintain API.

The Interactions API was built to support agentic interaction patterns natively. Still, generateContent remains the recommended route for standard production workloads: Interactions is in public beta and may introduce breaking changes.

Getting started and ecosystem

You can try the public beta today with your Gemini API key from Google AI Studio by following the documentation and the OpenAPI spec.
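For a first raw HTTP call without an SDK, a sketch using only the Python standard library might look like this. It assumes the beta endpoint lives at https://generativelanguage.googleapis.com/v1beta/interactions and accepts the key in the x-goog-api-key header used elsewhere in the Gemini API; confirm both against the OpenAPI spec. make_request only prepares the request, and send performs the actual call.

```python
import json
import urllib.request

# Assumption: beta endpoint URL; verify it against the published OpenAPI spec.
INTERACTIONS_URL = "https://generativelanguage.googleapis.com/v1beta/interactions"


def make_request(body: dict, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a JSON POST to the /interactions endpoint."""
    return urllib.request.Request(
        INTERACTIONS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: same API-key header the rest of the Gemini API uses.
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the prepared request and decode the JSON response."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

In practice you would read the key from an environment variable (the name is up to you) rather than hard-coding it, then call send(make_request(body, key)).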

Also, as a first integration step with the community, the Agent Development Kit (ADK) and the Agent2Agent (A2A) protocol already support the Interactions API.

Google plans to broaden support in the coming months and to bring these capabilities to Vertex AI.

Use cases and practical recommendations

- Long-horizon research: agents like Gemini Deep Research can run search pipelines, extract evidence and synthesize extensive reports. Background execution is key here.

- Tool orchestration: if you need a model to coordinate APIs, databases and MCP services, the composable representation makes auditing and resuming flows easier.

- Reducing client complexity: delegate state management to the server to minimize bugs in mobile clients or lightweight microservices.
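Background execution changes the client's job from holding a connection open to checking back later. The sketch below assumes a request flag named background, a status field on the interaction resource, and the terminal status values listed in TERMINAL_STATES; all of these are illustrative assumptions. fetch is any callable you supply that retrieves the interaction by ID (for instance via a GET request), which keeps the polling logic testable offline.

```python
import time
from typing import Any, Callable, Dict

# Assumption: status names are illustrative; check the API schema.
TERMINAL_STATES = {"completed", "failed", "cancelled"}


def background_body(agent: str, input_text: str) -> Dict[str, Any]:
    """Request body asking the server to run the agent in the background (assumed flag)."""
    return {"agent": agent, "input": input_text, "background": True}


def wait_for_interaction(
    fetch: Callable[[str], Dict[str, Any]],
    interaction_id: str,
    *,
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3 * 3600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, Any]:
    """Poll fetch(interaction_id) until a terminal status or the timeout expires."""
    deadline = clock() + timeout_seconds
    while True:
        interaction = fetch(interaction_id)
        status = interaction.get("status")
        if status in TERMINAL_STATES:
            return interaction
        if clock() >= deadline:
            raise TimeoutError(f"{interaction_id} still {status!r} after {timeout_seconds}s")
        sleep(poll_seconds)
```

Injecting sleep and clock lets tests run the loop instantly; for jobs that take minutes or hours, a coarse poll interval keeps request volume low.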

Practical tips: define your state model from the start (what to store and for how long), use the interpretable schema to instrument logs and tests, and test background execution on long workflows before moving them to production.
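As an example of instrumenting logs with the interpretable schema, the sketch below turns each output of an interaction into one stable log line that tests can assert on. It assumes an interaction exposes an outputs list whose items carry a type field with values such as thought, function_call and text; those names and the helper audit_lines are assumptions, not the documented schema.

```python
import json
import logging
from typing import Any, Dict, List

log = logging.getLogger("interactions.audit")


def audit_lines(interaction: Dict[str, Any]) -> List[str]:
    """One line per output item: '<id>#<index> <type> <detail>'.

    Assumption: output items carry a "type" such as "thought",
    "function_call" (with "name"/"arguments") or "text" (with "text").
    """
    lines: List[str] = []
    for i, item in enumerate(interaction.get("outputs", [])):
        kind = item.get("type", "unknown")
        if kind == "function_call":
            # sort_keys keeps the line stable across runs, which helps tests.
            args = json.dumps(item.get("arguments", {}), sort_keys=True)
            detail = f"{item.get('name')}({args})"
        elif kind == "text":
            detail = repr(item.get("text", "")[:80])  # truncate long answers
        else:
            detail = ""  # e.g. thoughts: log only that one occurred
        lines.append(f"{interaction.get('id')}#{i} {kind} {detail}".rstrip())
    return lines


def log_interaction(interaction: Dict[str, Any]) -> None:
    for line in audit_lines(interaction):
        log.info(line)
```

Logging the type of every step, rather than only the final text, is what makes a failed tool call or a resumed flow easy to audit afterwards.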

Limitations and warnings

- Public beta means a risk of breaking changes. Don’t assume contract stability for critical integrations without a migration plan.

- For standard, stable workloads, generateContent is still the mature option. Use Interactions only when you truly need agentic capabilities: interleaved thinking, complex tool calls, long-running execution or agent composition.

Final thoughts

Google is shifting the conversation from “models as black boxes” to “models and agents as systems.” The Interactions API doesn't just unify access: it proposes a more structured, less fragile way to bring agents to production.

What’s next? Bringing these capabilities natively to platforms like Vertex AI and the open ecosystem, so you can build robust agents without reinventing context management.