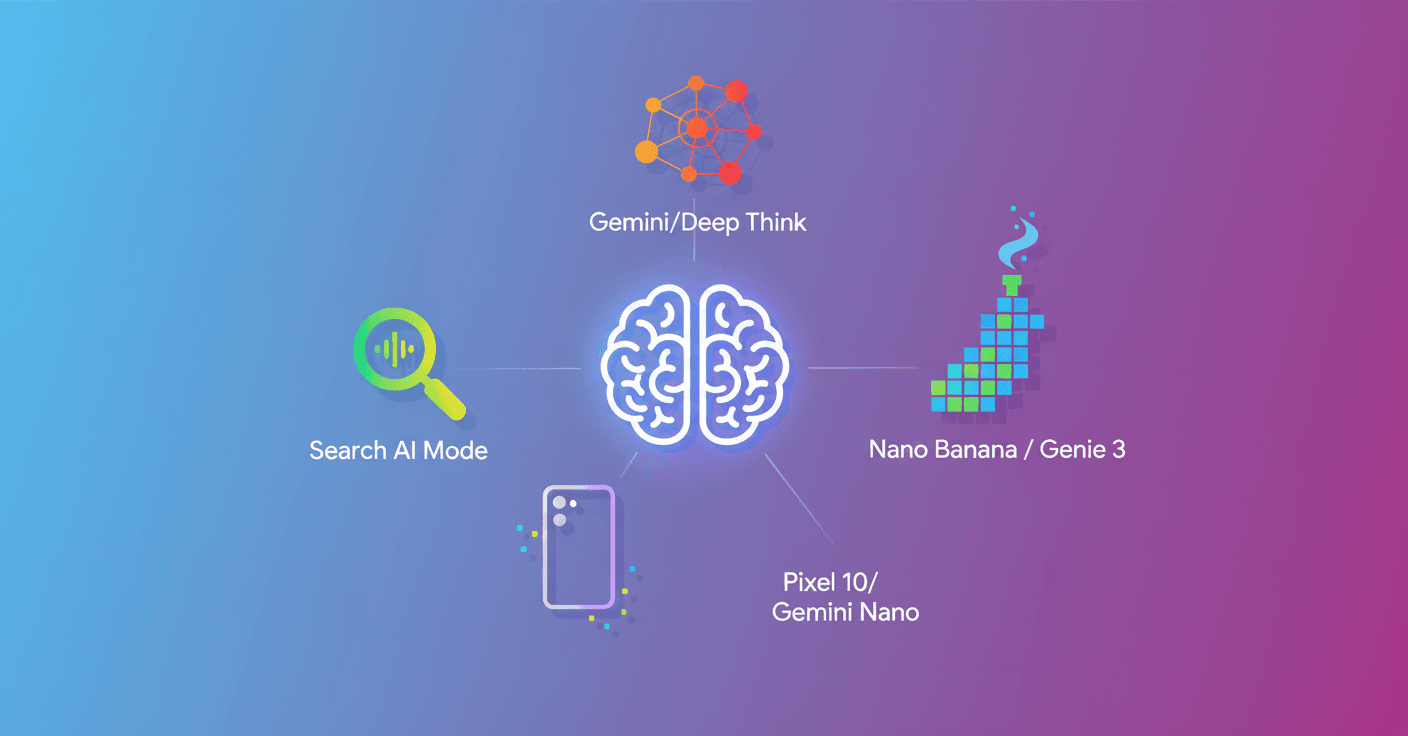

In August 2025, Google rolled out a wave of AI updates spanning Search, Pixel, Gemini and DeepMind. The announcements aim to move artificial intelligence out of the lab and into concrete, everyday tasks, from editing photos to solving complex research problems. (blog.google)

The essentials Google announced

Here are the most important points, clearly and without fuss.

- AI Mode in Search expanded: it now includes more agentic and personalized features, such as recommendations based on your preferences and the ability to share results. These features are already live for people who joined the Labs experience, and AI Mode itself has expanded to more than 180 countries in English. (blog.google)

- Deep Think arrives in the Gemini app for Google AI Ultra subscribers; it's the variant focused on complex reasoning and problem solving. Google highlights that a version of the model earned recognition at the International Mathematical Olympiad. (blog.google)

- New Pixel 10 lineup with on-device AI: the Pixel 10, Pixel 10 Pro, Pixel 10 Pro XL and Pixel 10 Pro Fold are all powered by the Tensor G5 chip and ship with features like Gemini Nano, Magic Cue and Gemini Live. This raises the bar for visual assistants and on-device AI capabilities. (blog.google)

- The Gemini app gets 'Nano Banana', an image editing and generation model that Google highlights as one of the best at keeping faces consistent and blending styles. Great if you work with photos of people or pets. (blog.google)

- DeepMind unveils Genie 3: a generalist world model that generates interactive environments from text, designed to train agents in rich simulations. Google positions it as a key step toward broader training environments. (blog.google)

- Educational and productivity tools: Google offered a free year of its AI Pro plan to university students in several countries and rolled out study tools such as NotebookLM, Guided Learning and Veo 3. It also released Jules, a coding agent that integrates with your repositories and automates programming tasks. (blog.google)

- Live translation and language practice in Google Translate: real-time translation across more than 70 languages to help you communicate, plus tools for practicing a language. (blog.google)

What practical impact do these updates have?

So how do these updates change your daily life or work? Few of them are just "pretty demos"; many have immediate applications.

- If you're a student or teacher, temporary access to AI Pro and tools like NotebookLM can speed up reviewing texts, generating outlines and preparing materials. Can you imagine summarizing a complex article in minutes? That's possible now. (blog.google)

- If you work with images, Nano Banana makes portrait edits easier while keeping the subject recognizable: you can change clothing or blend styles without deep technical skills. Useful for content creators and small businesses that need fast, consistent images. (blog.google)

- For developers and software teams, an agent like Jules that understands repositories and executes work plans can cut down on repetitive work such as reviewing PRs, generating tests or sketching out functions. It won't replace human judgment, but it speeds up routine engineering work. (blog.google)

- In Search, AI Mode with personalization and agentic actions means fewer steps to get everyday things done, for example planning a dinner or comparing options that take your personal context into account. Does letting it make decisions for you feel useful or a bit worrying? That's the real conversation. (blog.google)

Risks and questions worth asking

It's not all upside. Every practical feature raises legitimate questions.

- Privacy and personalization: when Search acts on your behalf, what data does it use and how is it stored? Before opting into personalized features, review the permissions and privacy policies involved. (blog.google)

- Quality and verification: models that generate text or environments (like Genie 3) are powerful but not infallible. You need to validate results, especially for critical decisions. (blog.google)

- Responsible image editing: Nano Banana makes realistic edits easy. How should its use on photos of other people be regulated? Technical capabilities like this need legal and ethical guidance. (blog.google)

What you can try right now and how to start

- If you have a Pixel 10 or you're into photography, try the Gemini Nano features in the Gemini app to see how much they streamline your workflow. (blog.google)

- If you're a student, check whether your university qualifies for the free AI Pro subscription and explore NotebookLM to organize readings and notes. (blog.google)

- If you code, take a look at Jules: delegate a few small tasks to it and measure how much time it saves. (blog.google)

A quick read on the near future

Google is pushing toward AI that is more deeply integrated into devices and services, with models that don't just answer questions but also generate environments and take actions. That opens big opportunities for productivity and creativity, and it obliges the community to keep having serious debates about safety, privacy and verification.

Interested in a step-by-step guide to trying any of these tools, tailored to your profile? I can put together a practical guide for students, creators or developers, with concrete steps and real examples.

Summary: Google rolled out several AI updates in August 2025: the expansion of AI Mode in Search, Deep Think in Gemini, the new Pixel 10 lineup with Gemini Nano, the Nano Banana image model and DeepMind's Genie 3. The updates aim to apply AI to education, productivity and creativity, but they also raise questions about privacy and verification.