Imagine you ask a model: "Is coffee good for you?" How should it answer if it doesn't know whether you're pregnant, have high blood pressure, or just want a short, practical answer? That missing context can make many language-model evaluations unfair or uninformative.

What they propose and how it works

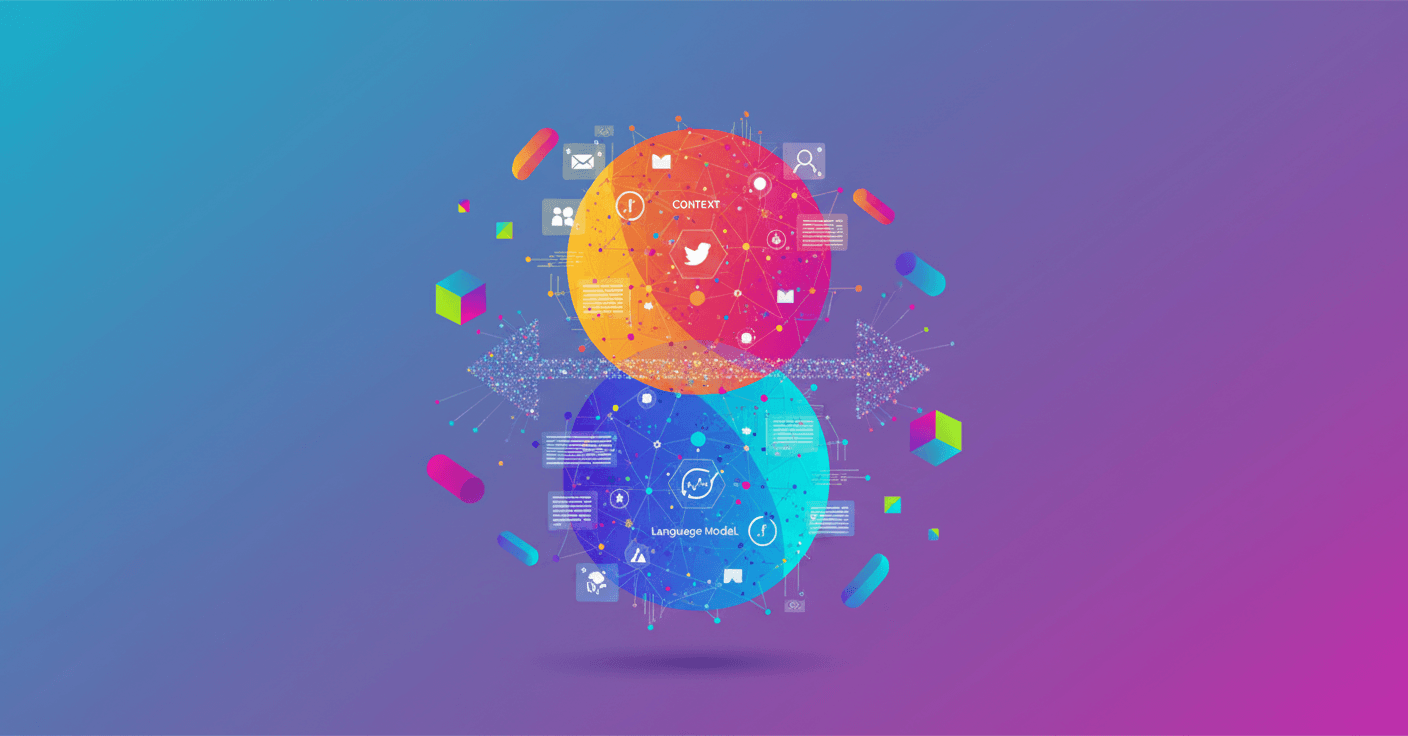

The Allen Institute for AI (Ai2) proposes a protocol called Contextualized Evaluations. Instead of posing underspecified questions to models with no background, it generates pairs of follow-up questions and answers that simulate the information a real user might provide in a conversation. Both the model being tested and the evaluator then work from the same scenario and the same criteria. (allenai.org)
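To make the idea concrete, here is a minimal sketch of how a query and its follow-up question-and-answer pairs might be bundled so that the model and the evaluator see identical context. The class names (`FollowUp`, `ContextualizedQuery`), the `build_prompts` helper, and the prompt wording are all hypothetical illustrations, not Ai2's actual data format or prompts.

```python
from dataclasses import dataclass, field


@dataclass
class FollowUp:
    """One simulated clarification: a question a model could ask, and the user's answer (hypothetical)."""
    question: str
    answer: str


@dataclass
class ContextualizedQuery:
    """An underspecified user query plus the context that disambiguates it (hypothetical)."""
    query: str
    context: list[FollowUp] = field(default_factory=list)

    def render(self) -> str:
        # Render the query and every follow-up pair as plain text.
        lines = [f"User query: {self.query}"]
        if self.context:
            lines.append("Context about the user:")
            for qa in self.context:
                lines.append(f"- Q: {qa.question} A: {qa.answer}")
        return "\n".join(lines)


def build_prompts(cq: ContextualizedQuery, response: str) -> tuple[str, str]:
    """Return (model_prompt, judge_prompt) built from the SAME rendered context."""
    shared = cq.render()
    model_prompt = shared
    judge_prompt = (
        shared
        + "\n\nCandidate response:\n"
        + response
        + "\n\nJudge the response given the context above."
    )
    return model_prompt, judge_prompt


if __name__ == "__main__":
    cq = ContextualizedQuery(
        "Is coffee good for you?",
        [
            FollowUp("Are you pregnant?", "No."),
            FollowUp("Do you have high blood pressure?", "Yes."),
        ],
    )
    model_prompt, judge_prompt = build_prompts(cq, "Moderate intake is often fine, but ...")
    print(model_prompt)
    print("---")
    print(judge_prompt)
```

The design choice worth noting is that the judge prompt is built from the exact string the model received, so the response is graded against the scenario it was actually given rather than the evaluator's own assumptions.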

To generate that context, Ai2 prompts large language models with simple instructions and then has humans validate the results. In their study, annotators judged most of the generated questions to be important and the alternative answers to be realistic, complete, and diverse. This suggests that plausible contexts can be created automatically and at scale.
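The human-validation step can be pictured as a filter over LLM-generated candidates. The sketch below assumes each candidate follow-up question carries several annotator ratings on the four criteria mentioned above (importance, realism, completeness, diversity) and keeps it only if a strict majority of annotators approves on every criterion. The majority rule, the `Rating` and `Candidate` structures, and the function names are hypothetical; the article does not say how Ai2 aggregated judgments.

```python
from dataclasses import dataclass

# The four criteria named in the study; the boolean rating scheme is an assumption.
CRITERIA = ("important", "realistic", "complete", "diverse")


@dataclass
class Rating:
    """One annotator's judgment of a generated follow-up and its answer set (hypothetical)."""
    important: bool
    realistic: bool
    complete: bool
    diverse: bool


@dataclass
class Candidate:
    """An LLM-generated follow-up question with alternative answers and human ratings (hypothetical)."""
    question: str
    answers: list[str]
    ratings: list[Rating]


def majority(votes: list[bool]) -> bool:
    # Strict majority: more than half of the votes must be True.
    return sum(votes) * 2 > len(votes)


def passes_validation(c: Candidate) -> bool:
    # Unrated candidates are rejected; otherwise every criterion needs a majority.
    if not c.ratings:
        return False
    return all(majority([getattr(r, crit) for r in c.ratings]) for crit in CRITERIA)


def filter_candidates(cands: list[Candidate]) -> list[Candidate]:
    """Keep only candidates that pass human validation."""
    return [c for c in cands if passes_validation(c)]


if __name__ == "__main__":
    keep = Candidate(
        "Are you pregnant?",
        ["Yes", "No"],
        [Rating(True, True, True, True), Rating(True, True, False, True), Rating(True, True, True, True)],
    )
    drop = Candidate(
        "What is your favorite color?",
        ["Red", "Blue"],
        [Rating(False, True, True, True), Rating(False, True, True, True), Rating(True, True, True, True)],
    )
    for c in filter_candidates([keep, drop]):
        print(c.question)
```

Requiring agreement on every criterion is deliberately conservative; a real pipeline might instead weight criteria differently or use graded scores rather than yes/no votes.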