Voice cloning is no longer science fiction: with just a few seconds of audio, you can replicate a voice almost perfectly. How do you allow legitimate uses without opening the door to deepfakes? Hugging Face proposes a practical, technical solution: a voice consent gate that only opens if the person says, clearly and verifiably, that they agree.

What is the voice consent gate

The idea is simple and powerful: before a model generates audio in someone's voice, the system requires a recording where the person says a specific consent phrase for that context. If the phrase matches what's expected, the flow continues. If not, the generation is blocked.

The gate turns an ethical principle into a computational condition: without an unequivocal act of consent, the model cannot speak for you.
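That computational condition can be sketched in a few lines of Python. This is a minimal sketch, not the demo's code: it assumes an ASR system has already produced a transcript of the recording, and the names `normalize`, `consent_gate`, `generate_if_consented`, and the `synthesize` callable (standing in for the TTS model) are hypothetical.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Lowercase, drop accents and punctuation, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", text)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    no_punct = re.sub(r"[^\w\s]", " ", no_accents.lower())
    return " ".join(no_punct.split())


def consent_gate(transcript: str, expected_phrase: str) -> bool:
    """Open the gate only if the transcript matches the expected phrase word for word."""
    return normalize(transcript) == normalize(expected_phrase)


def generate_if_consented(transcript, expected_phrase, synthesize, text):
    """Call the (hypothetical) TTS function only after the consent check passes."""
    if not consent_gate(transcript, expected_phrase):
        raise PermissionError("Consent phrase not verified; generation blocked.")
    return synthesize(text)


expected = "I give my consent to use the EchoVoice model with my voice"
print(consent_gate("I give my consent, to use the EchoVoice model with my voice.", expected))
```

Requiring an exact match after normalization is deliberately strict. A fuzzy similarity threshold would tolerate small ASR errors, but it could also accept a transcript such as "I do not give my consent to use…", which differs from the expected phrase by only one inserted word.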

This also enables traceability and auditing: there is a record that the system acted only after explicit consent was given. Sounds strict? It's exactly what we need when technology can mimic human identities.

How the proposal works technically

The demo implementation is based on three key components:

- A generator of session-specific consent phrases. Each phrase should mention the model and the context, for example: "I give my consent to use the EchoVoice model with my voice". It is paired with a second, neutral phrase that provides phonetic variety.

- An automatic speech recognition (ASR) system that verifies the person said exactly the generated phrase. This involves transcribing the recording and securely matching the transcript against the expected text.

- A voice cloning (TTS) system that learns the speaker's voice from the consent recording and the neutral phrase.
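The first component, generating a session-specific phrase pair, might look like the sketch below. It is hypothetical: the consent template follows the example phrase quoted later in this article, the neutral sentences are taken from the demo examples shown here, and `ConsentPrompt` and `make_consent_prompt` are illustrative names, not the demo's API.

```python
import random
import uuid
from dataclasses import dataclass
from typing import Optional

# Hypothetical pool of neutral sentences (taken from this article's examples);
# a real deployment would use a larger, curated set chosen for phonetic coverage.
NEUTRAL_SENTENCES = [
    "The weather is bright and calm this morning.",
    "After a gentle morning walk, I'm feeling relaxed and ready to speak freely now.",
    "My daily commute involves navigating through crowded streets on foot most days lately anyway.",
]


@dataclass(frozen=True)
class ConsentPrompt:
    """A consent sentence plus a neutral sentence, tied to one session."""
    session_id: str
    consent_sentence: str
    neutral_sentence: str

    @property
    def full_text(self) -> str:
        # The text the speaker is asked to read aloud, in order.
        return f"{self.consent_sentence} {self.neutral_sentence}"


def make_consent_prompt(model_name: str, rng: Optional[random.Random] = None) -> ConsentPrompt:
    """Build a session-specific prompt that names the model explicitly."""
    rng = rng or random.Random()
    consent = f"I give my consent to use my voice for generating audio with the model {model_name}."
    return ConsentPrompt(
        session_id=uuid.uuid4().hex,  # fresh ID per session, useful for later audit
        consent_sentence=consent,
        neutral_sentence=rng.choice(NEUTRAL_SENTENCES),
    )


prompt = make_consent_prompt("EchoVoice")
print(prompt.session_id)
print(prompt.full_text)
```

Keeping the consent sentence and the neutral sentence as separate fields lets the ASR check target the consent sentence specifically, while the cloning step uses the full recording.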

Practical details and good practices

- Consent phrases: short, natural, and referencing the model and the intended use. Recommended length: around 20 words.

- Direct microphone capture: the demo recommends recording from the microphone in real time, rather than uploading a file, to rule out pre-recorded or manipulated clips.

- Phonetic variety: cloning works better with coverage of vowels and consonants, a neutral tone, and a clear start and end to the recording.

- Phrase pairs: the system generates a pair in which one phrase contains the explicit consent and the other adds phonetic diversity. Proposed example: "I give my consent to use my voice for generating audio with the model EchoVoice. The weather is bright and calm this morning."
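The phrase guidelines above can be turned into rough automated checks before a pair is shown to the speaker. The sketch below is hypothetical: `check_phrase_pair` is an illustrative name, the 20 ± 8 word window is an assumed tolerance around the recommended length, and letter coverage is only a crude orthographic proxy for phonetic variety (real coverage would require a phoneme-level transcription; "y" is counted as a consonant here).

```python
import re

VOWEL_LETTERS = set("aeiou")
CONSONANT_LETTERS = set("bcdfghjklmnpqrstvwxyz")


def check_phrase_pair(consent: str, neutral: str, model_name: str,
                      target_words: int = 20, tolerance: int = 8) -> dict:
    """Heuristic checks on a consent/neutral pair; thresholds are assumptions."""
    consent_words = re.findall(r"[A-Za-z']+", consent)
    letters = set(re.sub(r"[^a-z]", "", (consent + " " + neutral).lower()))
    return {
        # The consent sentence must name the model and state consent explicitly.
        "mentions_model": model_name.lower() in consent.lower(),
        "states_consent": "consent" in consent.lower(),
        # Length close to the recommended ~20 words.
        "length_ok": abs(len(consent_words) - target_words) <= tolerance,
        # Fraction of vowel and consonant letters that appear at least once.
        "vowel_coverage": len(letters & VOWEL_LETTERS) / len(VOWEL_LETTERS),
        "consonant_coverage": len(letters & CONSONANT_LETTERS) / len(CONSONANT_LETTERS),
    }


report = check_phrase_pair(
    "I give my consent to use my voice for generating audio with the model EchoVoice.",
    "The weather is bright and calm this morning.",
    "EchoVoice",
)
print(report)
```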

Demo usage options

- Direct access to the model: once the gate opens, the consent audio is used to clone the voice, and the model can then generate arbitrary text in the speaker's voice.

- Consent for uploaded files: you can adapt the phrase and flow so the speaker approves the use of existing recordings.

- Saving the consent audio: it can be uploaded to `huggingface_hub` for future use. In that case, the phrase must inform the speaker about storage and future uses.
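Because each usage option requires a different consent statement, the phrase can be derived from the chosen scope. This is a sketch under assumptions: `ConsentScope`, `consent_sentence`, and `may_store_consent_audio` are hypothetical names, and the extra clauses are illustrative wording, not the demo's. The actual upload (for example with `huggingface_hub`'s `HfApi.upload_file`) is deliberately left out.

```python
from enum import Enum


class ConsentScope(Enum):
    GENERATE = "generate"        # clone from the live consent recording only
    EXISTING_FILES = "existing"  # speaker also approves previously recorded files
    STORE = "store"              # consent audio is saved for future use


def consent_sentence(model_name: str, scope: ConsentScope) -> str:
    """Build a consent sentence whose wording covers exactly the requested scope."""
    base = f"I give my consent to use my voice for generating audio with the model {model_name}"
    if scope is ConsentScope.EXISTING_FILES:
        return base + ", including from recordings I have already made."
    if scope is ConsentScope.STORE:
        return base + ", and for this recording to be stored and reused later."
    return base + "."


def may_store_consent_audio(scope: ConsentScope) -> bool:
    """Only persist the consent audio if the spoken phrase mentioned storage."""
    return scope is ConsentScope.STORE


print(consent_sentence("EchoVoice", ConsentScope.STORE))
```

Tying storage permission to the scope, rather than to a separate checkbox, keeps what the speaker actually said and what the system is allowed to do in sync.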

Risks, mitigations and security considerations

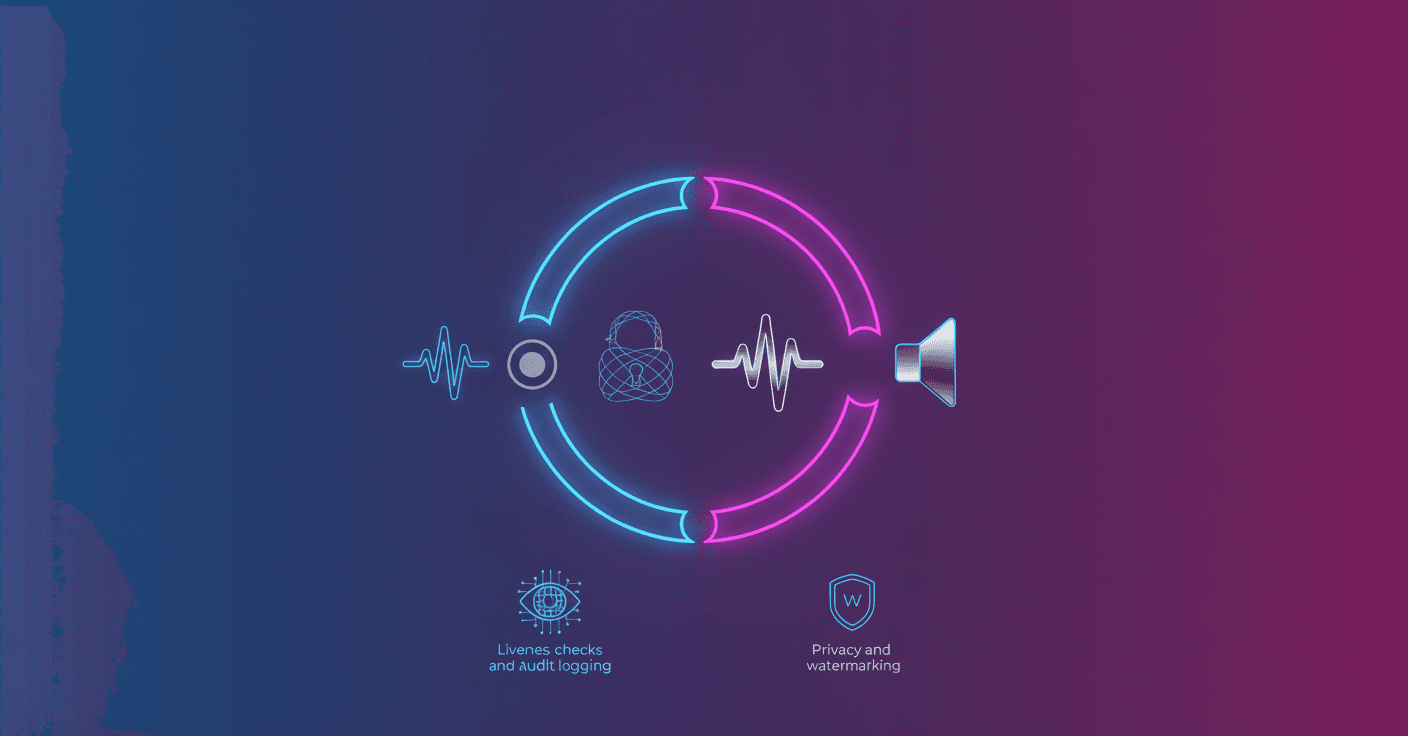

The proposal doesn't eliminate all risks, but it reduces abuse vectors if integrated with additional controls:

- Liveness and anti-spoofing: beyond textual matching, the system should use real-time presence detection so that a replayed clip is rejected.

- Match verification: use ASR thresholds and robust comparison methods to avoid false positives. Literal recognition isn't enough; adding acoustic-feature modeling helps.

- Logging and audit: store signed metadata (hash, timestamp, session ID, model used) to produce traceable evidence that can support legal claims.

- Privacy and retention: define clear policies on how long consent audio is kept, and offer revocation. This matters under frameworks such as GDPR.

- Audio watermarking: investigate methods to mark synthetic audio so it is easier to detect later.
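The logging recommendation can be illustrated with a tamper-evident audit record built from the metadata listed above. This is a minimal sketch, assuming a server-held secret key; `make_audit_record` and `verify_audit_record` are hypothetical names. It uses an HMAC from the Python standard library, which proves integrity only to parties holding the key; evidence verifiable by third parties would need an asymmetric signature (for example Ed25519) instead.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


def _canonical(record: dict) -> bytes:
    # Stable serialization so the same fields always produce the same bytes.
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def make_audit_record(audio_bytes: bytes, session_id: str, model_name: str,
                      secret_key: bytes, timestamp: Optional[float] = None) -> dict:
    """Store a hash of the consent audio (not the audio itself) plus signed metadata."""
    record = {
        "audio_sha256": hashlib.sha256(audio_bytes).hexdigest(),
        "timestamp": int(time.time() if timestamp is None else timestamp),
        "session_id": session_id,
        "model": model_name,
    }
    record["signature"] = hmac.new(secret_key, _canonical(record), hashlib.sha256).hexdigest()
    return record


def verify_audit_record(record: dict, secret_key: bytes) -> bool:
    """Recompute the HMAC over every field except the signature and compare safely."""
    unsigned = {k: v for k, v in record.items() if k != "signature"}
    expected = hmac.new(secret_key, _canonical(unsigned), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record.get("signature", ""))


rec = make_audit_record(b"...consent audio bytes...", "session-123", "EchoVoice", b"server-secret")
print(verify_audit_record(rec, b"server-secret"))
```

Storing only the audio hash keeps the log useful for audits even if the consent audio itself is deleted under a retention policy.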

Technical extensions and improvement lines

- Integrate a separate speaker verification model as a second layer to confirm that the voice belongs to the person who gave consent.

- Improve the ASR so it handles multiple languages and dialects robustly, including in noisy conditions.

- Design prompts and automatic templates that generate culturally sensitive, readable consent phrases in different languages.

- Adopt privacy mechanisms such as differential privacy or local training (federated learning) when vocal data is shared for modeling.

- Create UI flows that clearly show the user which model and which use were authorized, with options to revoke consent.
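The speaker verification layer usually comes down to comparing fixed-length voice embeddings. The sketch below assumes the embeddings have already been computed by some speaker encoder (not specified here); `cosine_similarity`, `same_speaker`, and the 0.7 threshold are illustrative assumptions, and a real threshold must be calibrated on data for the chosen encoder.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("Embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # degenerate embedding: treat as no match
    return dot / (norm_a * norm_b)


def same_speaker(consent_embedding: Sequence[float],
                 candidate_embedding: Sequence[float],
                 threshold: float = 0.7) -> bool:
    """Accept only if the candidate audio sounds like the person who consented."""
    return cosine_similarity(consent_embedding, candidate_embedding) >= threshold


# Toy vectors standing in for real speaker embeddings.
print(same_speaker([0.2, 0.9, 0.1], [0.25, 0.85, 0.05]))
```

In the uploaded-files scenario, for example, the candidate embedding would come from the existing recordings, so the gate also confirms that those recordings belong to the person who spoke the consent phrase.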

Concrete demo examples

Some example pairs generated by the system:

- "I give my consent to use my voice for generating synthetic audio with the Chatterbox model today. My daily commute involves navigating through crowded streets on foot most days lately anyway."

- "I give my consent to use my voice for generating audio with the model Chatterbox. After a gentle morning walk, I'm feeling relaxed and ready to speak freely now."

These pairs ensure each sample contains explicit consent while providing phonetic diversity useful for cloning.

Final reflection

The consent gate isn't a silver bullet, but it's a practical way to encode respect and autonomy into voice-generation systems. Isn't it better to have ethics recorded in the same flow that runs the model? If you want to experiment, the demo code is modular and can be adapted to different scenarios. With proper technical controls, voice cloning can empower people rather than impersonate them.